Listen now

The buzz around AI infrastructure has long centered on training large-scale models, but as generative AI moves into real-world applications, inference is rapidly taking center stage. AI inference is the process where a trained model is put to work, generating predictions or decisions from new, real-world data. Inference—the phase where models come to life in production environments — demands more than just compute power. It must balance latency, cost-efficiency, realism, and sustainability. And increasingly, it's becoming the true bottleneck in delivering seamless AI experiences.

One of the key trends shaping the AI infrastructure landscape today is the bifurcation between AI training and inference. While training large foundation models grabs headlines for its sheer compute scale, inference, especially at scale, has begun to dominate discussions due to its impact on both performance and energy consumption. The cumulative energy required for inference often surpasses that of the model’s training lifecycle—making it a critical focus area for sustainable AI.

The complexity of AI inference workloads

Currently NVIDIA’s GPUs are general purpose, meaning they don’t differentiate between training and inference workloads. Given the massive parallelism requirements of AI inference, GPUs remain the dominant platform of choice. Inference typically involves two distinct phases:

- Prefill phase – Understanding and computing the prompt

- Decode phase – Generating tokens based on the computed data

These two phases have opposing GPU utilization patterns. The prefill phase tends to saturate GPU utilization, while the decode phase often underutilizes GPUs due to high memory bandwidth requirements. This imbalance results in suboptimal GPU usage—often hovering around just 10%—which drives up costs per token and increases latency.

Given the diversity of real-world applications that involve both deep learning and non-deep-learning tasks, addressing inference challenges requires a holistic, end-to-end approach. Here’s how the industry is addressing it:

1. Hybrid CPU-GPU approaches

One method to reduce GPU strain is offloading parts of the inference workload to CPUs (Central Processing Units). While CPUs are suitable for less compute-intensive tasks, the latency introduced by switching between CPU and GPU can be a limiting factor, especially for real-time applications. This approach is best suited for batch processing and workloads where latency tolerance is acceptable.

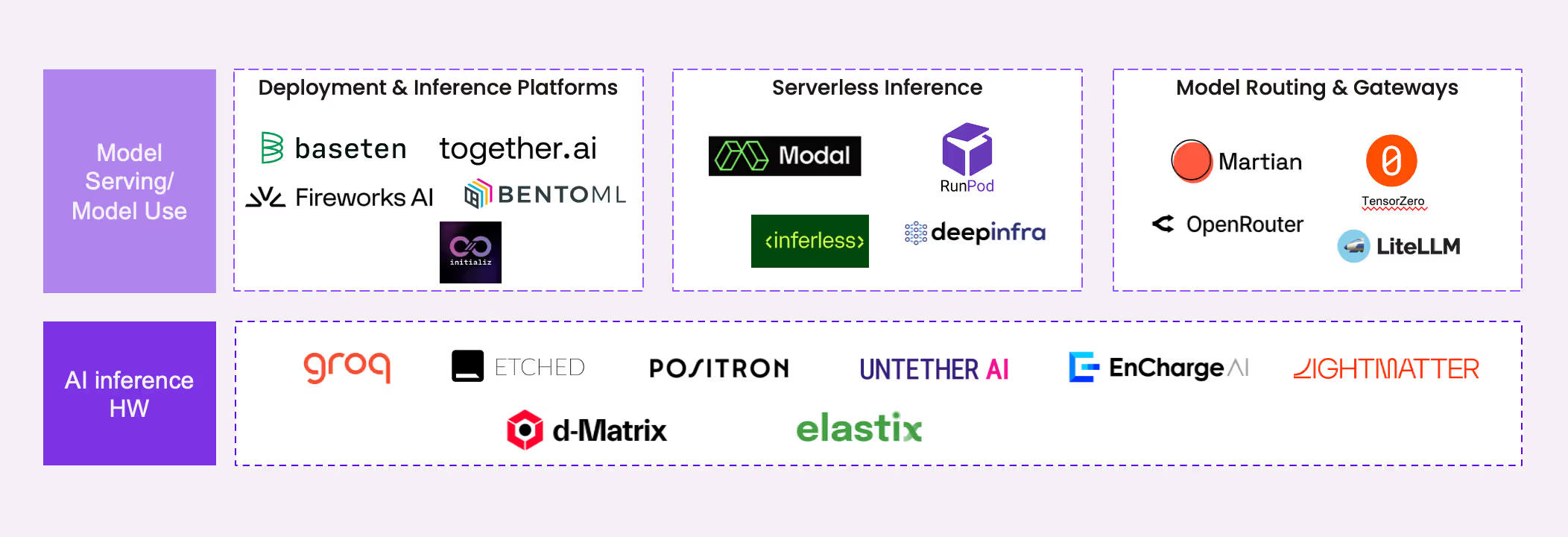

2. Specialized AI inference chips

• If you strip down a chip to its essentials, it consists of a) computation units and b) memory units. Inference bottlenecks arise not from slow computation but from memory bandwidth constraints – the so called memory wall problem. To address this a new generation of chips have emerged: General-purpose inference chips: Groq, d-Matrix

• Transformer-optimized chips: Etched

• In-memory compute chips: Encharge AI, Untether AI, LightMatter

• Model optimization + FPGA (Field-Programmable Gate Arrays) solutions: Positron, ElastiX

Each of these categories targets unique aspects of the inference challenge, signaling a move away from one-size-fits-all hardware solutions.

3. Software-based optimization

Hardware isn’t the only frontier. At the software layer, major gains are being made in model serving and optimization. Examples of these include approaches such as:

• Speculative decoding: Allows for predicting multiple tokens in advance, enabling parallel processing.

• Custom attention mechanisms: Help reduce memory bottlenecks in transformer models.

• Quantization: Lowers the precision of calculations, improving efficiency with minimal impact on accuracy.

Why this matters now

As more AI applications move from the lab into production, inference becomes the gateway to delivering on the promise of generative AI. Latency, cost, and sustainability are no longer side conversations—they’re make-or-break factors for product success.

The road ahead will likely involve a tailored mix of hardware and software innovations. What’s clear is this: inference is no longer the silent sibling of AI training. It's the main event.

__

At NGP Capital, we’re eager to back the builders rethinking AI infrastructure for the age of inference. As generative models move from prototypes to products, inference becomes the performance layer that defines user experience, economics, and scalability.

Startups that solve for this layer—whether through custom silicon, optimized systems, or novel software—will define the next wave of AI-native platforms.

If you're building toward this future, let’s connect: divya@ngpcap.com!

.svg)

.svg)