Listen now

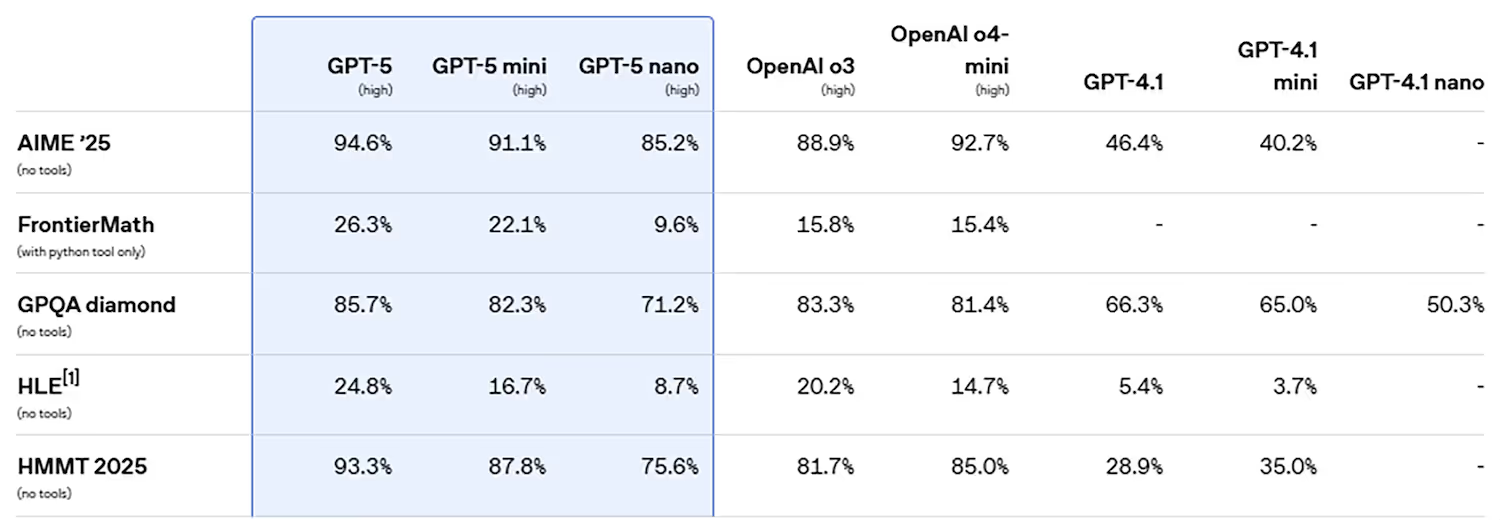

Have we already reached the point of diminishing return for AI scaling laws? Should we rethink the brute-force approach where larger models, more training data, and greater compute will translate to better performance? The ChatGPT-5 launch was clearly a reality check. Yes, it’s better—especially at coding and the cost-latency tradeoffs—but the leap many expected felt more like a step, and benchmarks reveal a similar story.

The AI scaling law is brutal where parameters, data, and compute must grow exponentially for performance to climb linearly. In VC terms, it’s similar to CAC—great until marginal dollars chase the same users at exponentially higher cost. Escaping the spiral requires a new playbook. For AI, the fix isn’t more horsepower but smarter design: constrain the problem, encode human judgement, and focus on post-training techniques that teach models when and how to act. Task-specific models, reinforcement learning, and agentic tools turn raw potential into reliable performance. The edge now comes from how you train and evaluate, not how large your base model is.

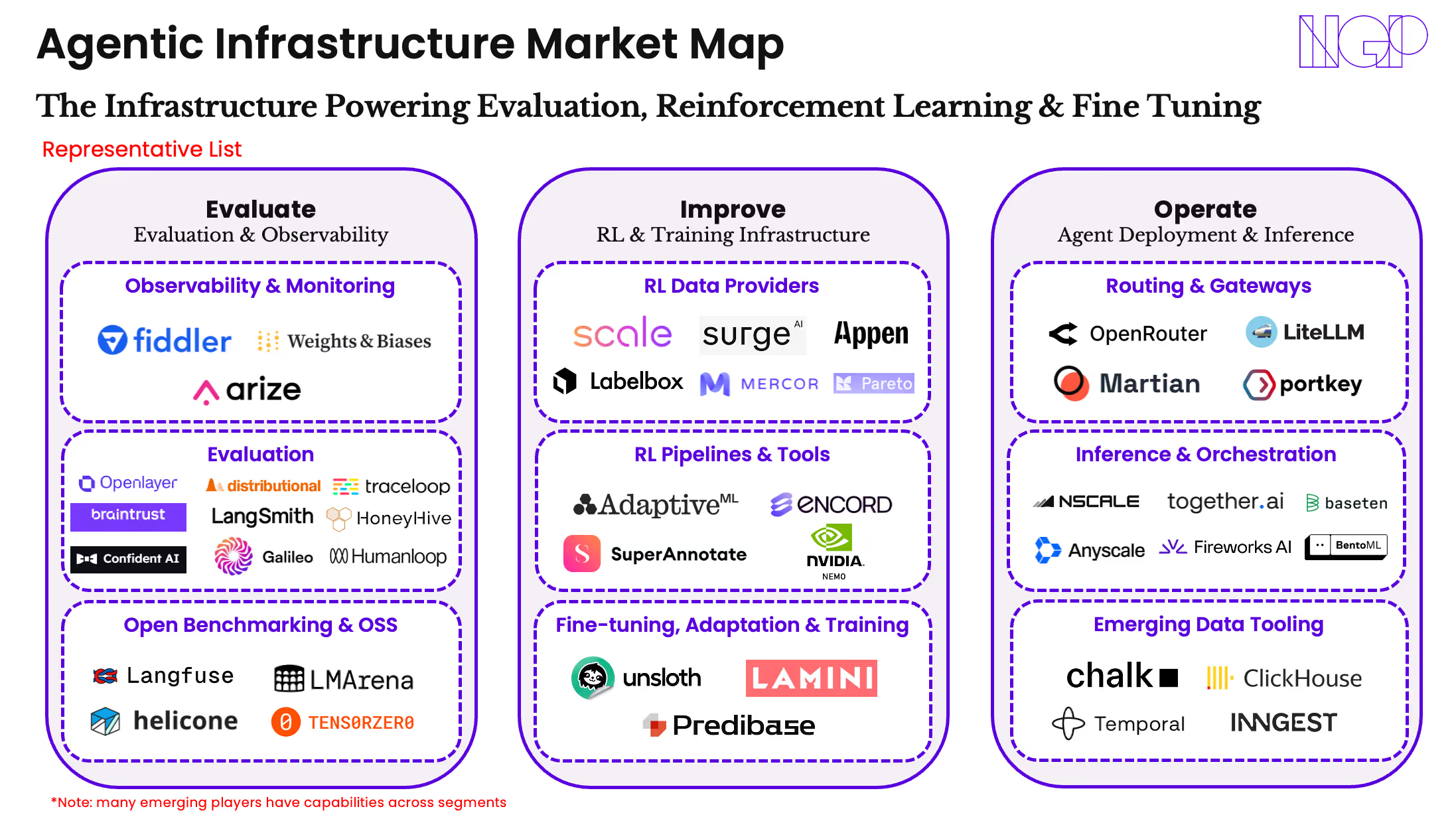

So, where’s the action now? In the post-training and evaluation stack. AI-first startups need complementary tooling that can capture granular model performance and feed reinforcement learning (RL). RL has become the most common post-training technique that rewards correct output and penalizes mistakes, teaching models a clear playbook to get the highest total score over time. Evals are the repeatable tests that grade AI on the tasks you care about—turning accuracy, reasoning, speed, and costs into scores that can be both tracked and improved.

LLMs are probabilistic and trained to reward confident guessing, which is why hallucination shows up like feature, not a bug. RL grounds behavior by making your evals your objective function. Yes, this approach is compute-hungry (>100x according to Jensen Huang), but the tradeoff is control and reliability on increasingly complex tasks.

Product teams should strive to build a concrete framework for scoring each step in the agentic workflow. This transforms black-box output into smaller steps of explainable (and auditable) results. The emerging “LLM-as-a-judge” approach of using a network of models/agents for autonomously orchestrating evals is the practical holy grail. However, before we get there, the next competitive frontier is EvalOps and democratizing fine-tuning techniques. The winners over the next 12 months will shape reasoning models into industry-specific, workflow-aware performance.

This shift is already rippling into fundamental science. In August, MIT published in Cell that they had developed a novel antibiotic designed by a foundation model that targets drug-resistant bacteria and validated in mice. This breakthrough is just one recent example of reasoning + verification loops creating original candidates rather than remixing the past—a clear proof point of what happens when we aim today’s models at the most challenging problems and reward them with the right objective function.

Why do we care about this example? Because we’ve crossed a threshold. If models can now rival the most proficient humans at abstract reasoning—even in scientific domains—the bottleneck isn’t raw capability anymore. It’s form-fitting that capability to specific industries and workflows through fine-tuning and evals.

Follow the money. Mercor’s historic surge to a >$10B valuation on the back of the fastest growth in history—from 0 to $500M run rate in just 17 months—validates the market opportunity across both large enterprises and venture-backed startups. RL and evaluation data pipelines are the new foundry layer of AI. This isn’t about “labels” anymore. What buyers are really securing is a reliable pipeline of calibrated human feedback—preference signals, process traces, and governed golden sets that fuel RL and anchor model performance in production. Whether or not you buy every headline number, the direction is clear. EvalOps is no longer a cost center, it’s the differentiator. The teams that secure the highest-quality feedback loops will compound faster on performance, margins, and market share.

Put simply, the era of bigger-is-better is giving way to smarter-is-measured. With reasoning now good enough to outperform humans on most tasks, winners will be the teams that (1) train for process—via RL and tool use—and (2) measure what matters, with live, domain-specific evals that map cleanly to business outcomes. The deeper your evals, the deeper your moat.

The EvalOps playbook for all AI founders:

Govern your golden sets: Golden sets are small, human-validated examples of “what good looks like” in the workflow. Save versions of these golden sets, track which scenarios are governed, set a simple target like “pass@K” (right within K tries, e.g. pass@3), and make sure human reviewers agree with each other before giving the output a score.

Own a portfolio of evals: Don’t test just one step. Keep a small set of tests (“golden sets”) that cover:

- Capability: can it do the task (e.g., solve a billing question)?

- Process-aware: did it take the right step (used the right tool, in the right order)?

- Robustness: does it hold up when prompts change or are intentionally tricky?

- UI/UX: is it helpful, fast, and not prone to hallucination?

Track all of this at the target speed and cost and continuously refresh the tests so they stay “fresh,” not memorized.

Ship with live testing: Prove it in stages: Offline (lab tests) → Shadow (reads production but doesn’t answer) → Canary (small % of users) → GA (everyone). Decide the success criteria before launch and use A/B tests tied to business KPIs (e.g., resolution rate / cost / latency).

Close the RL loop and maintain simplicity: Feed your eval results back into training (RL from human feedback or AI feedback). When the task doesn’t have a single “correct” answer, use process reward models (PRMs) to break down the task into smaller components and score each step in the workflow. Every tool (search, code runner, CRM lookup) expands the model’s choices and adds complexity. Begin with the fewest tools that are necessary for the task and introduce new tools only if they create tangible performance gains.

Instrument per segment: Build simple dashboards by user type, domain, and use case. Turn on alerts when quality drops or when your test scores drift from their normal range.

Founder checklist:

- Own your tests: Do you have task-relevant golden sets you update weekly (not just public leaderboards)?

- Score the process: Are you grading steps and tool use, not just the final answer?

- Show clear ROI: Can you prove value on accuracy, speed, and cost in terms an enterprise buyer cares about?

- Build a scalable feedback pipeline: Can you collect/use feedback at scale while continuing to provide quality checks on your scoring mechanism? Aim for more automation over time (data capture → labeling → retraining).

- Reward what customers value: Is your reward design aligned to real outcomes (verifiers/PRMs), not a proxy metric from a paper?

Conclusion

As we move into 2026, startups no longer need the largest model to win their category, they need the clearest objective. In the RL era, that objective is encoded in your evals. Own the verifiers where you can, build PRMs where you can’t, and measure performance against transparent, customer-centric KPIs.That’s how you transform reasoning into customer ROI and, ultimately, defensibility.

At NGP Capital, this is why we’re excited to back founders building vertical, agentic automation—those applying the latest advances in RL, fine-tuning, and EvalOps to specific industries, from manufacturing and robotics to geospatial intelligence and cybersecurity. The pattern we’re seeing across winning approaches is clear. The moat doesn’t come from raw scale, but from aligning intelligent systems to real workflows and measurable outcomes.

If you’re a founder building vertical intelligence into enterprise workflows, I would love to chat—reach out to eric@ngpcap.com!

.svg)

.svg)